Alane Suhr

Publications

*, ** indicate equal contribution.

| Preprints | Links |
|---|---|
| Characterizing language use in a collaborative situated game. Nicholas Tomlin*, Naitian Zhou*, Eve Fleisig, Liangyuan (Circle) Chen, Téa Wright, Lauren Vinh, Laura X. Ma, Seun Eisape, Ellie French, Tingting Du, Tianjiao Zhang, Alexander Koller, and Alane Suhr. | |
| ThreadWeaver: Adaptive threading for efficient parallel reasoning in language models. Long Lian, Sida Wang, Felix Juefei-Xu, Tsu-Jui Fu, Xiuyu Li, Adam Yala, Trevor Darrell, Alane Suhr, Yuandong Tian, and Xi Victoria Lin. | |
| Long chain-of-thought reasoning across languages. Josh Barua, Seun Eisape, Kayo Yin, and Alane Suhr. | |

| 2025 | ||

|---|---|---|

|

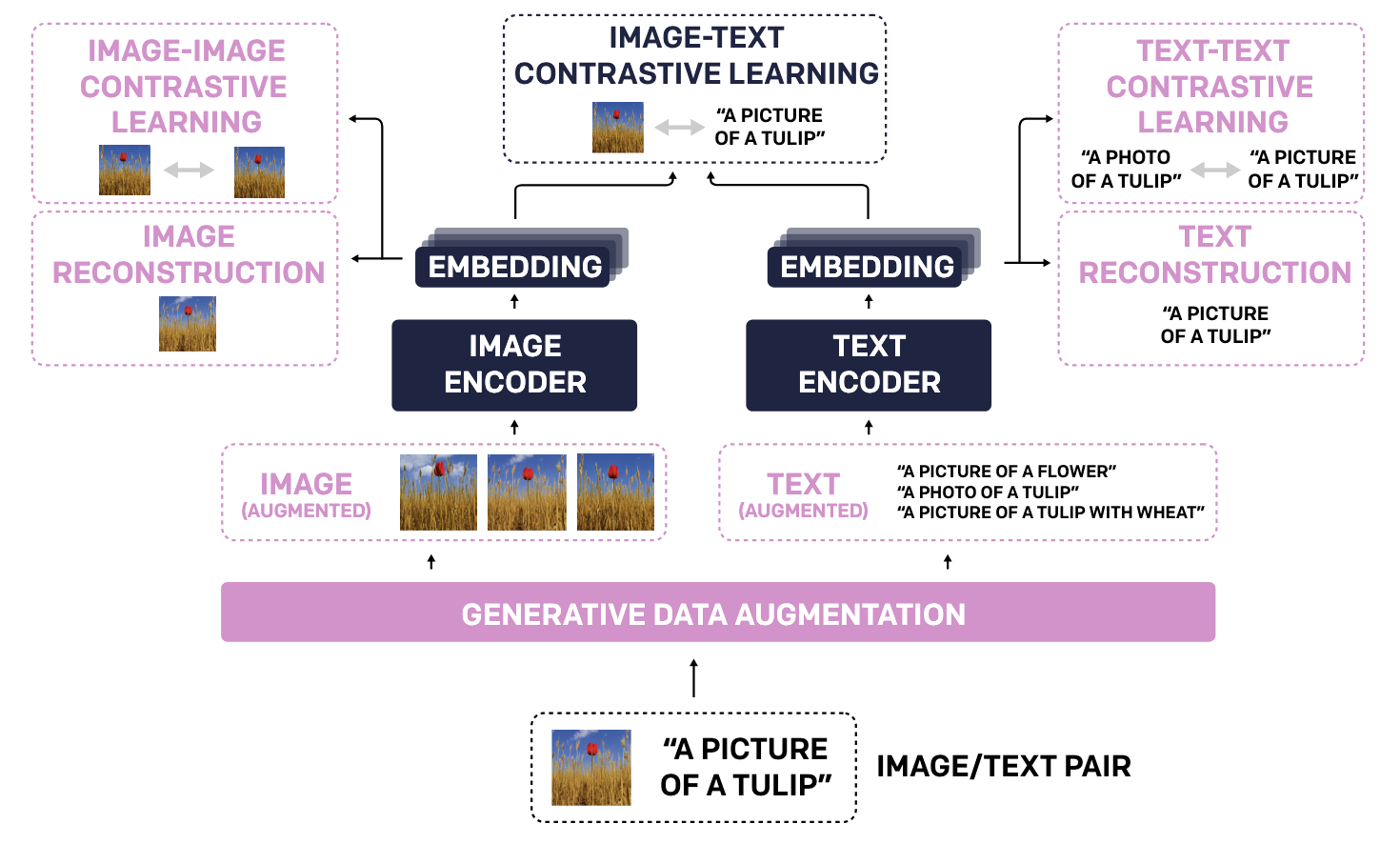

TULIP: Towards unified language-image pretraining. Zineng Tang, Long Lian, Seun Eisape, XuDong Wang, Roei Herzig, Adam Yala, Alane Suhr, Trevor Darrell, and David M. Chan. Workshop on What is Next in Multimodal Foundation Models? (MMFM4) at ICCV. |

web |

|

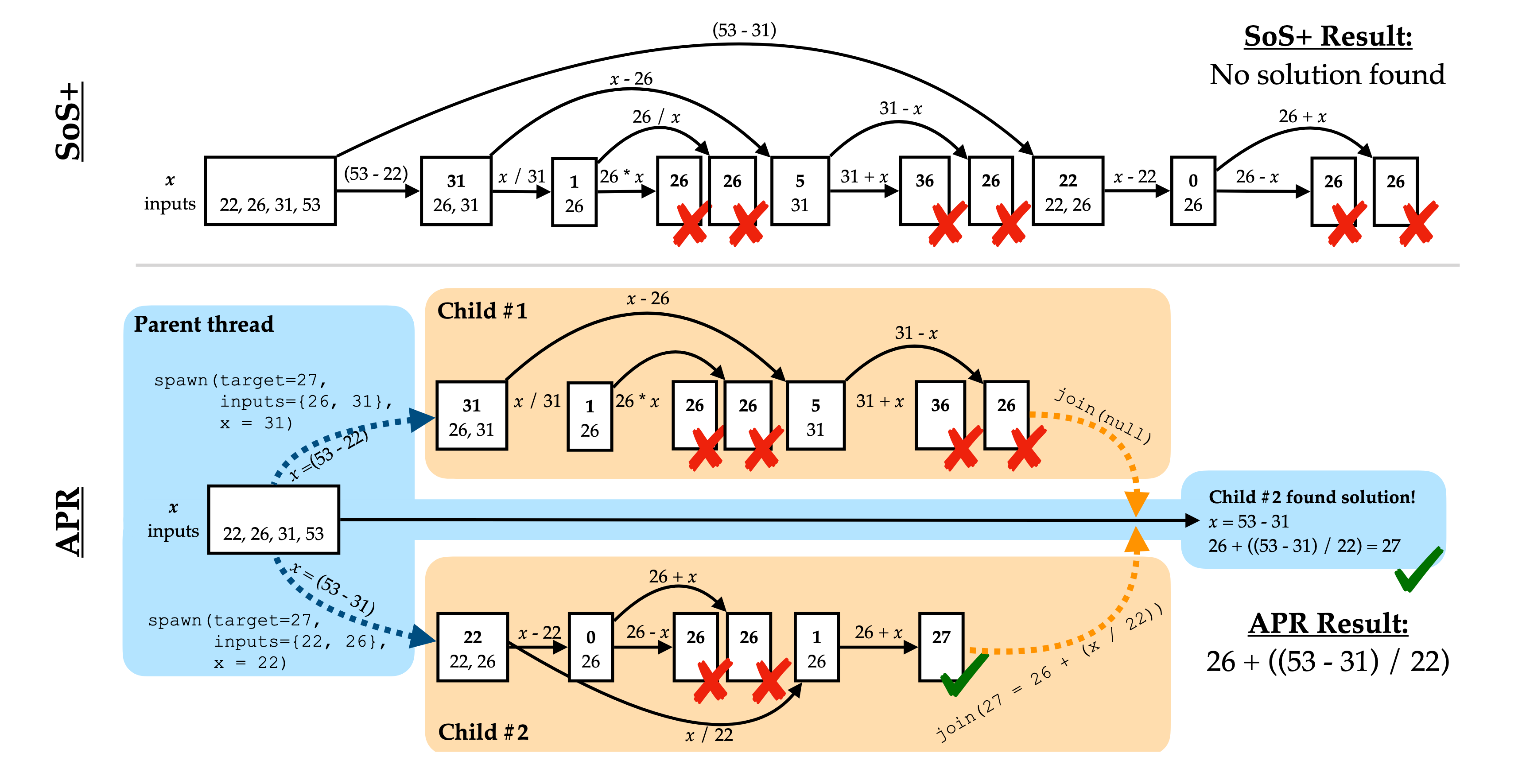

Learning adaptive parallel reasoning with language models. Jiayi Pan*, Xiuyu Li*, Long Lian*, Charlie Snell, Yifei Zhou, Adam Yala, Trevor Darrell, Kurt Keutzer, and Alane Suhr. COLM. Also appeared at the Efficient Systems for Foundation Models (ES-FoMo) Workshop at ICML. |

code |

| Training software engineering agents and verifiers with SWE-Gym. Jiayi Pan*, Xingyao Wang*, Graham Neubig, Navdeep Jaitly, Heng Ji, Alane Suhr**, and Yizhe Zhang**. ICML. Also appeared at the Deep Learning for Code (DL4C) Workshop at ICLR 2025. |

code | |

|

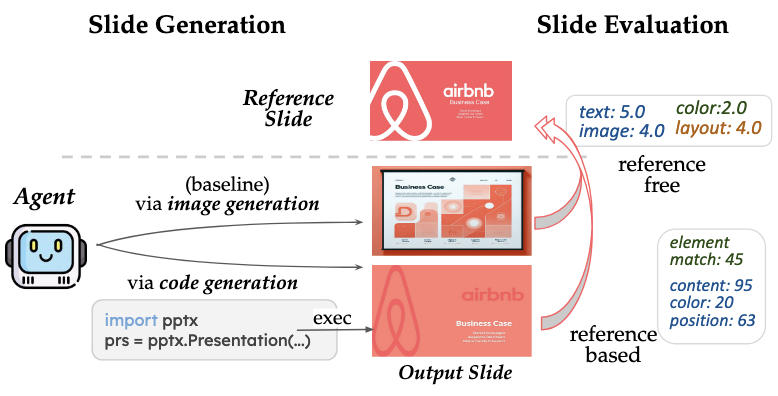

AutoPresent: Designing structured visuals from scratch. Jiaxin Ge*, Zora Zhiruo Wang*, Xuhui Zhou, Yi-Hao Peng, Sanjay Subramanian, Qinyue Tan, Maarten Sap, Alane Suhr**, Daniel Fried**, Graham Neubig**, and Trevor Darrell**. CVPR. | code |

|

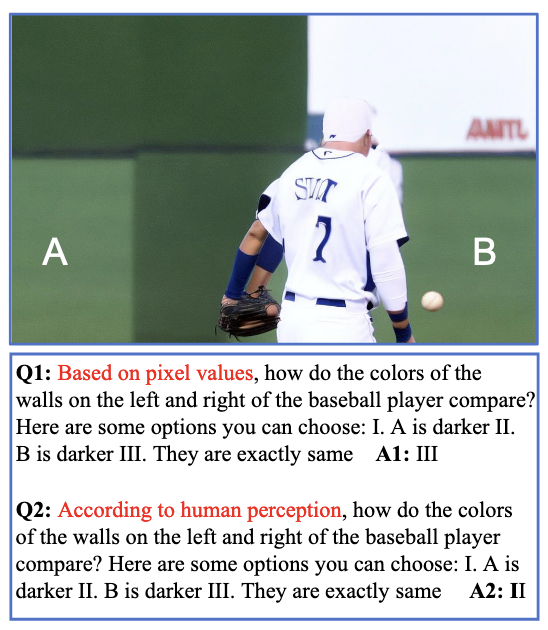

Evaluating model perception of color illusions in photorealistic scenes. Lingjun Mao, Zineng Tang, and Alane Suhr. CVPR. |

| 2024 | ||

|---|---|---|

|

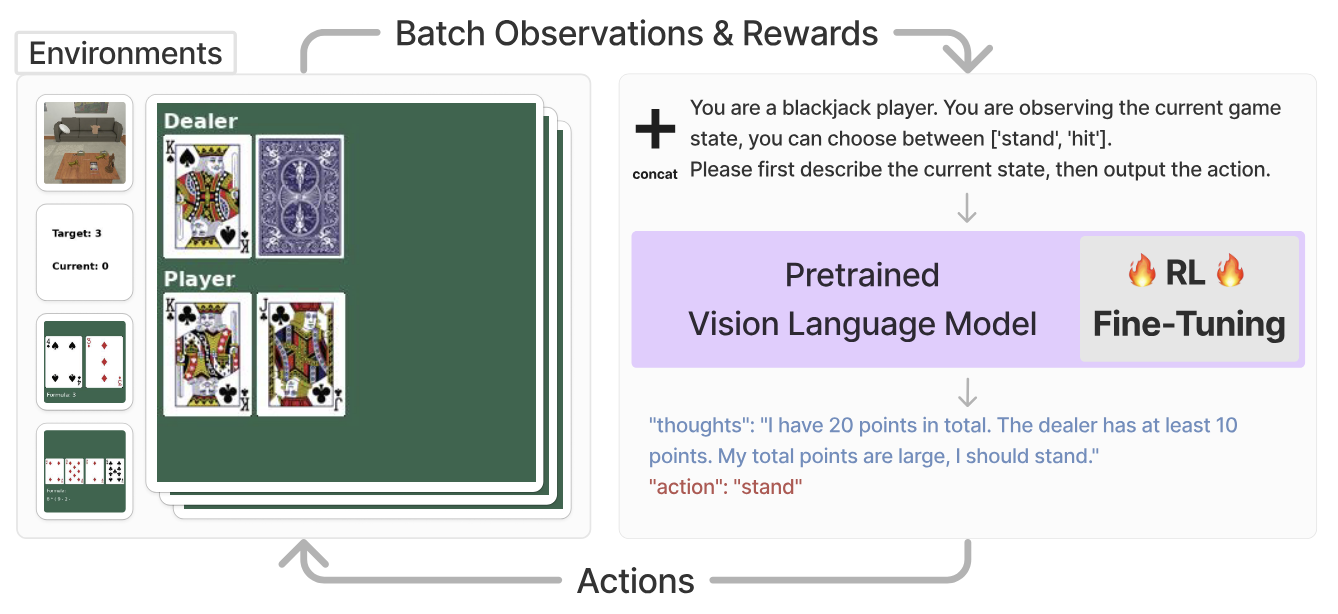

Fine-tuning large vision-language models as decision-making agents via reinforcement learning. Yuexiang Zhai, Hao Bai*, Zipeng Lin*, Jiayi Pan*, Shengbang Tong*, Yifei Zhou*, Alane Suhr, Saining Xie, Yann LeCun, Yi Ma, and Sergey Levine. In NeurIPS. | webcode |

|

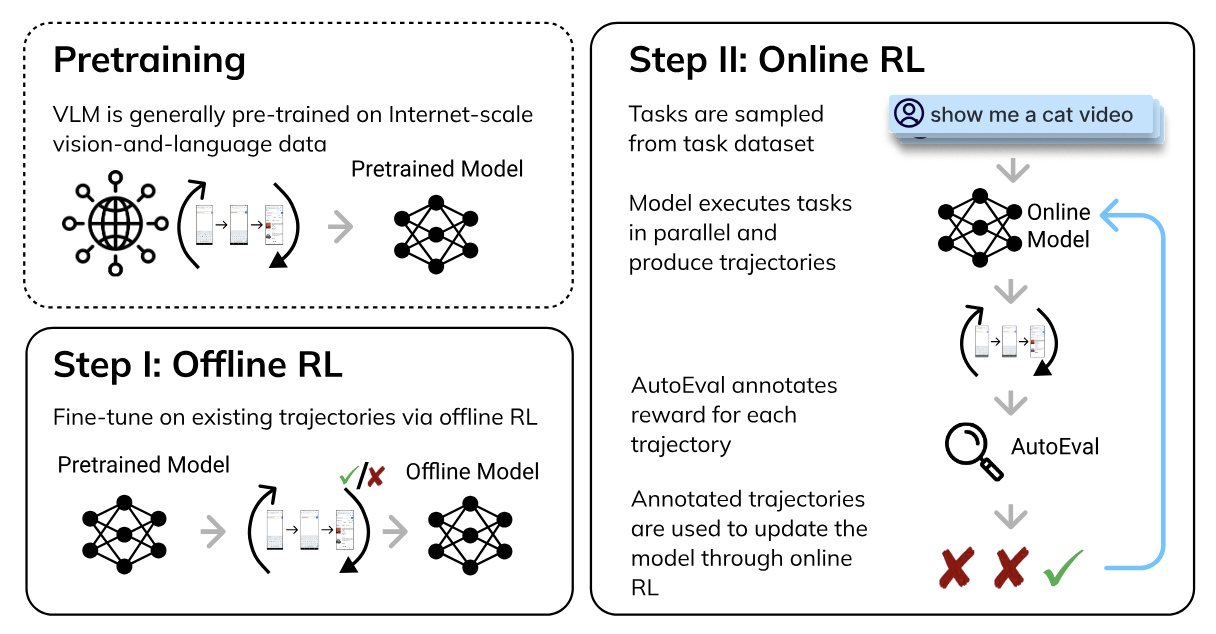

DigiRL: Training in-the-wild device-control agents with autonomous reinforcement learning. Hao Bai*, Yifei Zhou*, Mert Cemri, Jiayi Pan, Alane Suhr, Sergey Levine, and Aviral Kumar. In NeurIPS. | webcode |

|

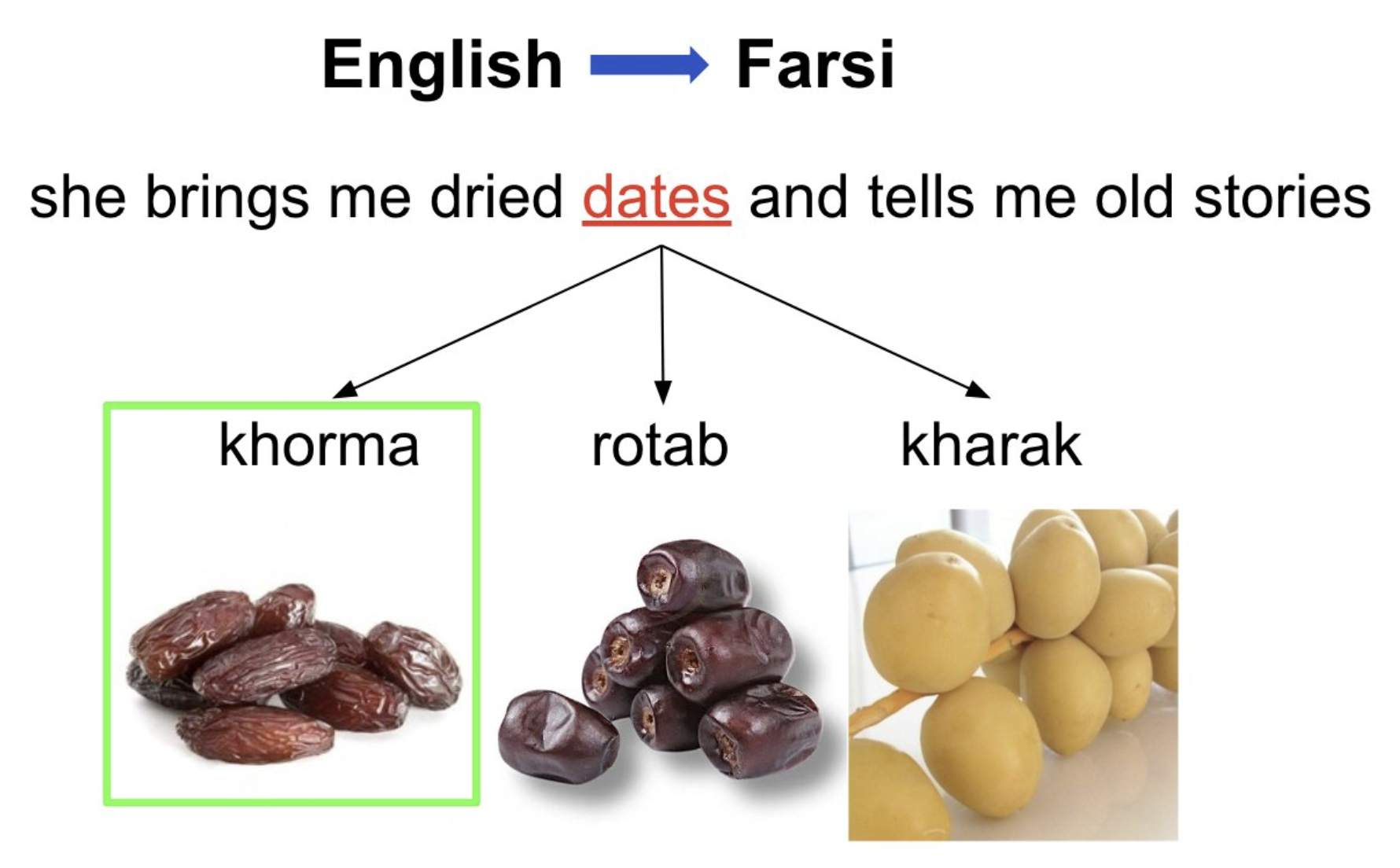

Using language models to disambiguate lexical choices in translation. Josh Barua, Sanjay Subramanian, Kayo Yin, and Alane Suhr. In EMNLP. | |

|

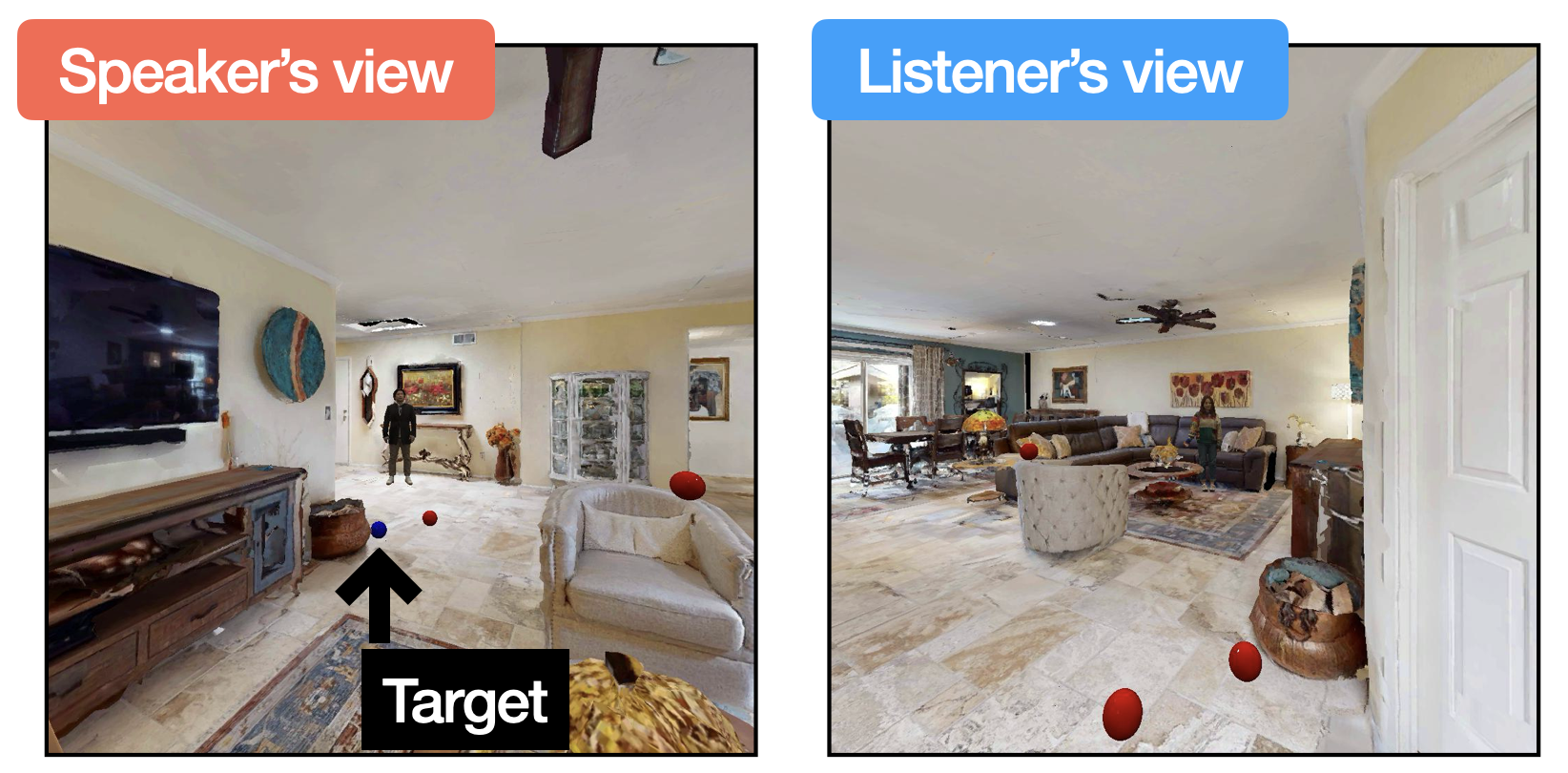

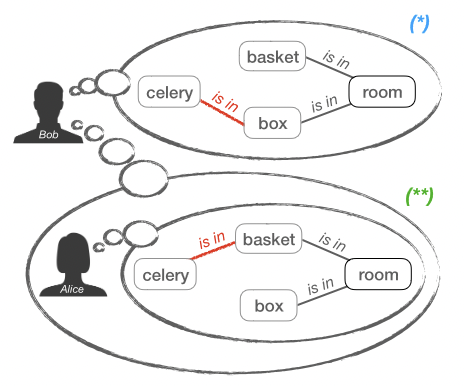

Grounding language in multi-perspective referential communication. Zineng Tang, Lingjun Mao, and Alane Suhr. In EMNLP. | |

| Autonomous evaluation and refinement of digital agents. Jiayi Pan, Yichi Zhang, Nicholas Tomlin, Yifei Zhou, Sergey Levine, and Alane Suhr. Appeard in COLM. Also appeared at MAR Workshop at CVPR 2024 (won best paper)! |

webcode | |

|

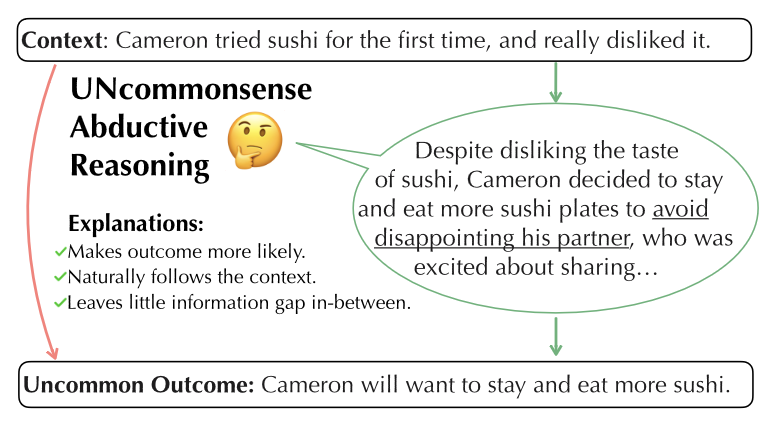

UNcommonsense reasoning: Abductive reasoning about uncommon situations. Wenting Zhao, Justin T. Chiu, Jena D. Hwang, Faeze Brahman, Jack Hessel, Sanjiban Choudhury, Yejin Choi, Xiang Lorraine Li*, and Alane Suhr*. In NAACL. | |

|

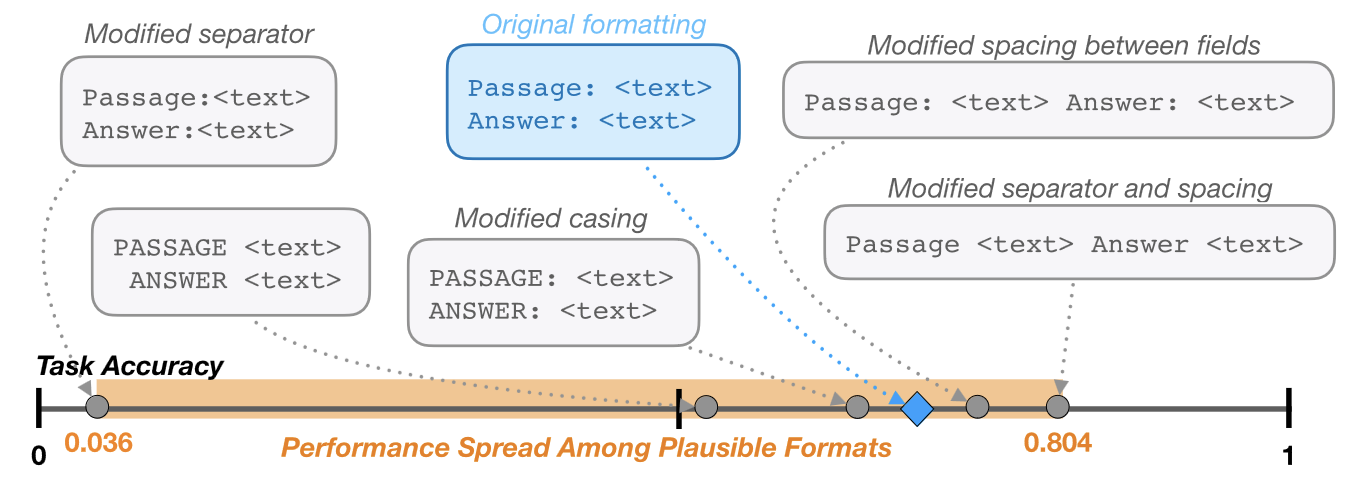

Quantifying language models' sensitivity to spurious features in prompt design or: How I learned to start worrying about prompt formatting. Melanie Sclar, Yejin Choi, Yulia Tsvetkov, and Alane Suhr. In ICLR. | code |

| What's in my big data? Yanai Elazar, Akshita Bhagia, Ian Magnusson, Abhilasha Ravichander, Dustin Schwenk, Alane Suhr, Pete Walsh, Dirk Groeneveld, Luca Soldaini, Sameer Singh, Hannaneh Hajishirzi, Noah A. Smith, and Jesse Dodge. In ICLR. Spotlight |

web |

| 2023 | Links |
|---|---|
| We're afraid language models aren't modeling ambiguity. Alisa Liu, Zhaofeng Wu, Julian Michael, Alane Suhr, Peter West, Alexander Koller, Swabha Swayamdipta, Noah A. Smith, and Yejin Choi. In EMNLP. | |
| Continual learning for instruction following from realtime feedback. Alane Suhr and Yoav Artzi. In NeurIPS. Spotlight. | |
| Fine-grained human feedback gives better rewards for language model training. Zeqiu Wu*, Yushi Hu*, Weijia Shi, Nouha Dziri, Alane Suhr, Prithviraj Ammanabrolu, Noah A. Smith, Mari Ostendorf, and Hannaneh Hajishirzi. In NeurIPS. Spotlight. | web |
| Do embodied agents dream of pixelated sheep?: Embodied decision making using language guided world modelling. Kolby Nottingham, Prithviraj Ammanabrolu, Alane Suhr, Yejin Choi, Hannaneh Hajishirzi, Sameer Singh, and Roy Fox. In ICML. Also appeared at the Reincarnating RL Workshop at ICLR 2023. | web |
| Minding language models' (lack of) theory of mind: A plug-and-play multi-character belief tracker. Melanie Sclar, Sachin Kumar, Peter West, Alane Suhr, Yejin Choi, and Yulia Tsvetkov. In ACL. Outstanding Paper Award. Also appeared at the Theory of Mind Workshop at ICML 2023. | |

| 2022 | ||

|---|---|---|

|

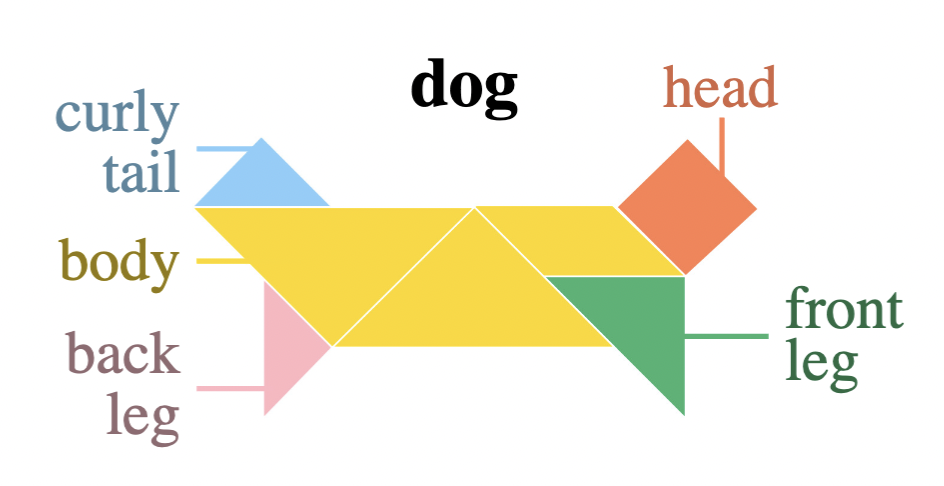

Abstract visual reasoning with tangram shapes. Anya Ji, Noriyuki Kojima*, Noah J. Rush*, Alane Suhr*, Wai Keen Vong, Robert Hawkins, and Yoav Artzi. In EMNLP. Best Long Paper Award |

data |

| 2021 | Links |
|---|---|
| Analysis of language change in collaborative instruction following. Anna Effenberger, Rhia Singh*, Eva Yan*, Alane Suhr, and Yoav Artzi. In Findings of EMNLP. Also appeared at SCiL 2022. | code |
| Crowdsourcing beyond annotation: Case studies in benchmark data collection. Alane Suhr, Clara Vania, Nikita Nangia, Maarten Sap, Mark Yatskar, Samuel R. Bowman, and Yoav Artzi. Tutorial presented at EMNLP. | |
| Continual learning for grounded instruction generation by observing human following behavior. Noriyuki Kojima, Alane Suhr, and Yoav Artzi. In TACL. | code |

| 2020 | Links |
|---|---|
| Exploring underexplored generalization challenges for cross-database semantic parsing. Alane Suhr, Ming-Wei Chang, Peter Shaw, and Kenton Lee. In ACL. | code talk |

| 2019 | ||

|---|---|---|

|

Executing instructions in situated collaborative interactions. Alane Suhr, Claudia Yan, Charlotte Schluger*, Stanley Yu*, Hadi Khader**, Marwa Mouallem**, Iris Zhang, and Yoav Artzi. In EMNLP. |

data web |

|

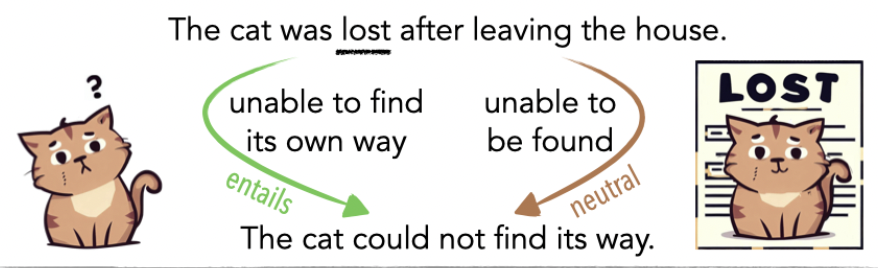

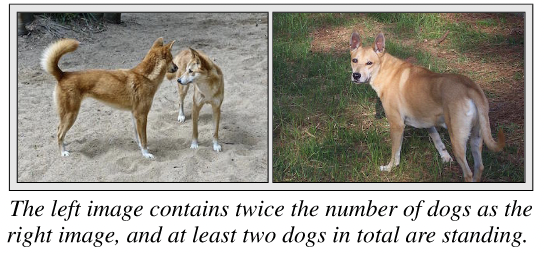

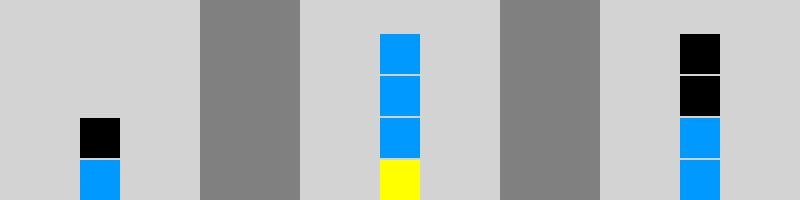

A corpus for reasoning about natural language grounded in photographs. Alane Suhr*, Stephanie Zhou*, Ally Zhang, Iris Zhang, Huajun Bai, and Yoav Artzi. In ACL. Also appeared at the 2017 AAAI Fall Symposium on Natural Communication for Human-Robot Collaboration. |

data web |

|

Touchdown: Natural language navigation and spatial reasoning in visual street environments. Howard Chen, Alane Suhr*, Dipendra Misra, Noah Snavely, and Yoav Artzi. In CVPR. |

code |

| 2018 | Links |
|---|---|
| Situated mapping of sequential instructions to actions with single-step reward observation. Alane Suhr and Yoav Artzi. In ACL. | code talk |
| Neural semantic parsing. Matt Gardner, Pradeep Dasigi, Srinivasan Iyer, Alane Suhr, and Luke Zettlemoyer. Tutorial presented at ACL. | |
| Learning to map context-dependent sentences to executable formal queries. Alane Suhr, Srinivasan Iyer, and Yoav Artzi. In NAACL. Outstanding Paper Award. | code talk |

| 2017 | ||

|---|---|---|

|

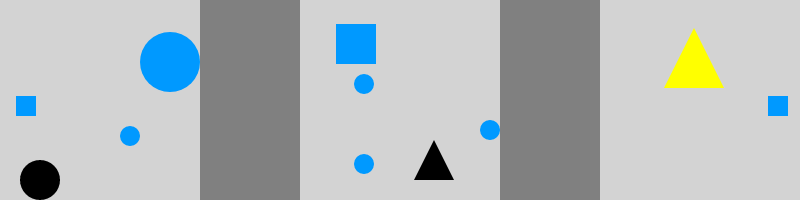

A corpus of natural language for visual reasoning. Alane Suhr, Mike Lewis, James Yeh, and Yoav Artzi. In ACL. Best Resource Paper Award Featured in AI Magazine and NLP Highlights. |

data web talk |